How B-Trees Actually Work on Disk: From RAM to SSD

Why You Should Care About B-Trees and Storage Layers

B-Trees are one of the most fundamental data structures in systems engineering. You’ll find them powering the storage engines of relational databases (PostgreSQL, MySQL, SQLite), key-value stores, and even file systems like HFS+ and NTFS.

Understanding how B-Trees interact with storage layers—from fast RAM to slower SSDs—is crucial if you care about performance, storage efficiency, and system design. Disk I/O remains one of the biggest bottlenecks in data-intensive systems, and the way B-Trees handle reads, writes, and node splits can make or break throughput at scale.

This issue takes you through the lifecycle of a B-Tree operation: from memory, through buffer caches, down to the SSD. We’ll break down the node structure, I/O patterns, and optimizations that make B-Trees disk-friendly.

B-Trees Refresher — Not Just Another Binary Tree

A B-Tree is a self-balancing tree data structure that maintains sorted data and allows logarithmic time operations. It’s optimized for systems that read and write large blocks of data.

Key Properties:

Nodes contain multiple keys and child pointers.

All leaves appear at the same depth.

Internal nodes direct search paths based on key ranges.

B-Tree vs B+Tree:

B-Tree: Both keys and values can appear in internal and leaf nodes.

B+Tree: Only leaves store values; Internal nodes store keys for navigation. Leaves are often linked for fast range queries.

B+Trees are more common in database systems due to their better range scan performance.

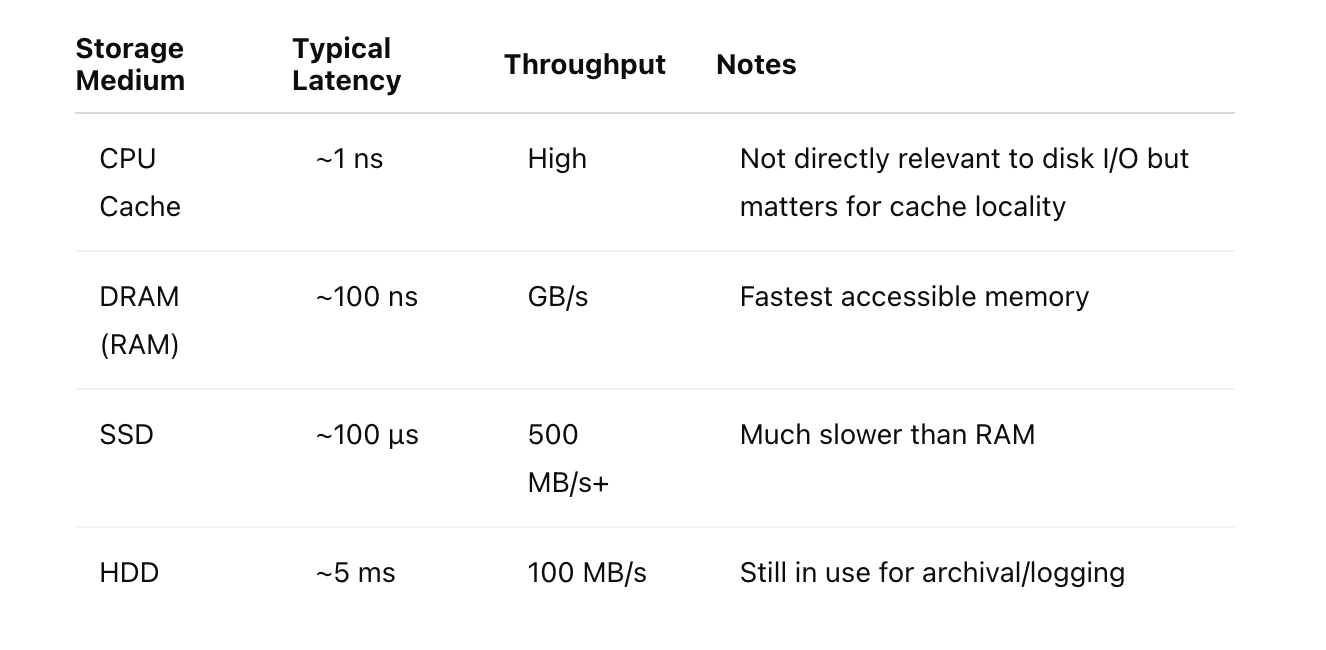

Memory Hierarchy Overview: RAM vs SSD vs Disk

Before we go further, it’s important to understand the cost of accessing data at different layers of the memory hierarchy:

When designing B-Trees for disk, we want to minimize disk I/O by ensuring that each operation touches as few blocks as possible.

Anatomy of a B-Tree Node on Disk

B-Tree nodes are often aligned with disk page sizes, commonly 4KB or 8KB. Here’s a simplified layout of a 4KB B-Tree node:

Header (e.g., 16 bytes): node type, number of keys

Keys (variable size): sorted list of keys

Pointers (offsets or block addresses): point to children (or values in leaf nodes)

Free space: reserved for inserts

Efficient page usage is critical. Too much free space means wasted storage; too little means frequent splits.

Searching, Insertion, and Deletion — Disk Edition

Search:

Start at root node (cached in memory if hot).

Binary search to find the key or next child pointer.

Follow pointer to child node (disk read if not in cache).

Repeat until leaf node is found.

Each level usually corresponds to a disk page. In a balanced B-Tree with 4KB pages and average fanout of 100, a tree 3-4 levels deep can index millions of entries with just 3-4 page reads.

Insertion:

Locate the leaf node.

Insert key in sorted order.

If node is full, split it and push a key up to the parent.

Splits may cascade up to the root.

Each split can involve a write to disk for both the old and new node, plus an update to the parent.

Caching and Buffer Pools: Keeping Hot Data in RAM

Database systems like MySQL or PostgreSQL don’t go to disk for every operation. They use buffer pools to keep frequently accessed pages in RAM.

Key Concepts:

Buffer Pool: Memory-resident cache of disk pages.

Page Replacement Policies: LRU, MRU, CLOCK.

Pinning: Prevents hot pages from being evicted.

This caching layer can dramatically improve performance, reducing the average I/O cost per query.

Case Studies from Production Systems

PostgreSQL:

Uses B+Trees for indexes.

Tuples contain item pointers.

Pages are 8KB with buffer pool caching.

MySQL (InnoDB):

Uses clustered indexes (B+Tree on primary key).

Secondary indexes reference primary key.

Pages are 16KB; includes doublewrite buffer to reduce corruption.

SQLite:

Entire DB is a B+Tree file.

Page cache configurable.

WAL mode improves write performance.

Final Thoughts

Understanding the internals of B-Trees across storage layers gives you a significant edge:

You can better diagnose performance bottlenecks.

You can tune configurations like page size or buffer pool size.

You understand when not to use a B-Tree (e.g., high write volume, append-only workloads).